Introduction

So, I’ve stumbled upon some extra free time lately (surprising, right?). Instead of bingeing on my backlog of games (which, let’s be honest, is tempting), I’ve decided to take on something a little different: writing my own CMS in the language I’m least familiar with, Go. It’s been a wild ride, and along the way I decided to experiment with using AI to help write tests for the service code. In this article, I’ll walk you through the ups and downs of using AI for testing and how it helped shape my little CMS project, CenariusCMS. Spoiler: I’ve been playing a sort of ping-pong match with the AI, and it’s been... surprisingly educational.

A Developer’s Perspective: The CMS and the AI

Before we get deep into the code, let me clarify a few things. While I’m a senior software engineer (with some solid experience in C#, Python, and TypeScript), I’m still pretty much a novice in Go. So, working on CenariusCMS—a headless, API-driven content management system—has been an enlightening personal development project, one that's taught me more than I ever imagined. The kind of learning you get from working on your own project sometimes surpasses what you learn on the job.

Now, why use AI for testing? I didn’t reach for AI to chase the latest buzzword. Nope. I had a practical reason: Go is a new frontier for me, and I wanted some backup while writing tests for my template service. And guess what? The AI caught things I didn’t expect, pushing me to rethink and refactor my service code. It’s like having a rubber duck that can also hand you test cases for your most elusive edge cases.

Diving Into the Code: Template Service in CenariusCMS

This is where things got interesting. My template service handles the creation, retrieval, updating, and deletion of content templates. Here’s part of the service:

package template

import (
	"context"
	"time"

	"github.com/go-playground/validator/v10"
	"github.com/myproject/pkg/models"
	"github.com/myproject/pkg/repositories"
	"go.mongodb.org/mongo-driver/bson/primitive"
)

type TemplateService interface {
	CreateTemplate(ctx context.Context, template *models.Template) error
	GetTemplates(ctx context.Context) ([]models.Template, error)
	GetTemplateByID(ctx context.Context, id primitive.ObjectID) (*models.Template, error)
	GetTemplateByName(ctx context.Context, name string) (*models.Template, error)
	UpdateTemplate(ctx context.Context, id primitive.ObjectID, template *models.Template) error
	DeleteTemplate(ctx context.Context, id primitive.ObjectID) error
}

type templateService struct {
	repository repositories.TemplateRepository
	validate   *validator.Validate
}

func NewTemplateService(templateRepo repositories.TemplateRepository) TemplateService {
	return &templateService{
		repository: templateRepo,
		validate:   validator.New(),
	}
}

// CreateTemplate logic with validation and auto-generated fields
func (s *templateService) CreateTemplate(ctx context.Context, template *models.Template) error {
	template.ID = primitive.NewObjectID()
	now := time.Now().UTC()
	template.CreatedAt = now
	template.UpdatedAt = now

	if err := s.validate.Struct(template); err != nil {
		return err
	}

	return s.repository.CreateTemplate(ctx, template)
}

// Other methods: GetTemplates, UpdateTemplate, DeleteTemplate

The service seemed robust, but the key word here is seemed. I needed thorough tests to ensure it actually worked as expected—especially when handling all sorts of edge cases that I might overlook, like missing required fields or strange combinations of input data. That’s where AI entered the picture.

AI: Not a Magic Wand, But a Powerful Tool

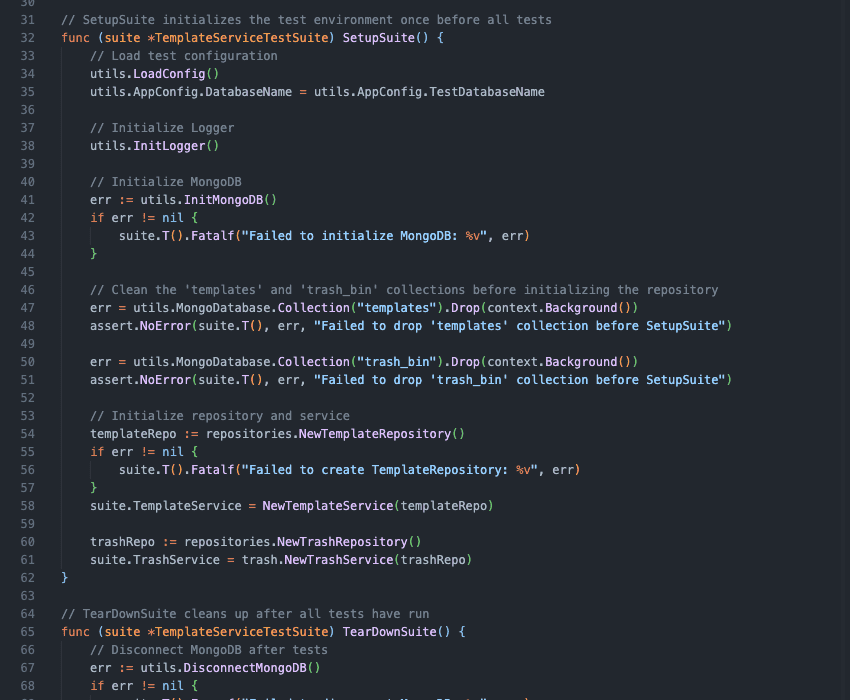

I was genuinely curious: How would AI-generated tests fare against this service? Could they handle all the nitty-gritty details like validation errors, missing fields, and MongoDB IDs? The initial results were pretty good, actually: about 75% of what I needed. Here’s the basic structure of a test case the AI generated:

func (suite *TemplateServiceTestSuite) TestCreateTemplate() {
	template := models.Template{
		Name: "ServiceTestTemplate",
		Fields: []models.FieldDefinition{
			{Name: "Title", Type: "text", Required: true},
			{Name: "Description", Type: "longtext", Required: false, Default: "Default Description"},
		},
	}

	err := suite.TemplateService.CreateTemplate(context.Background(), &template)
	assert.NoError(suite.T(), err, "Expected no error on template creation")

	insertedTemplate, err := suite.TemplateService.GetTemplateByID(context.Background(), template.ID)
	assert.NoError(suite.T(), err, "Expected no error retrieving the template")
	assert.NotNil(suite.T(), insertedTemplate, "Expected the inserted template to exist")
}

This worked! But… there was more to it than just running the tests the AI created.

The AI Ping-Pong: Back and Forth with Edge Cases

Let’s be clear: AI doesn’t replace human thinking, especially when it comes to the nuances of code. The initial test batch exposed some flaws in my template service—for example, missing validations and improper handling of empty fields. But after fixing these issues, I had to rethink the tests. The AI didn’t generate every edge case, so I stepped in to fill the gaps.

Once I had manually added some extra edge cases, I bounced the test suite back to the AI. It came up with more scenarios I hadn’t considered, like testing how the service behaves when creating templates with duplicate names or missing field types. Here’s one of the validation tests from that back-and-forth, covering a missing name:

func (suite *TemplateServiceTestSuite) TestCreateTemplateMissingName() {
	invalidTemplate := models.Template{
		Fields: []models.FieldDefinition{
			{Name: "Title", Type: "text", Required: true},
		},
	}

	err := suite.TemplateService.CreateTemplate(context.Background(), &invalidTemplate)
	assert.Error(suite.T(), err, "Expected validation error for missing 'Name'")
}

By working this way, I strengthened both my code and test coverage, discovering scenarios that would have slipped by if I were flying solo.

The AI Brain Farts: When Context Gets Lost

One thing I learned quickly: AI sometimes loses track of the context, especially when you work on complex prompts or multi-step problems. For example, I’d ask the AI to generate tests for a specific scenario, and it would circle back to things we’d already solved, like revalidating fields that had already been tested. At one point, I had to tell the AI to “stop hallucinating” and just provide simple implementations without rehashing old issues.

Here's a moment when the AI went off-track:

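// After already testing for valid field types...
func (suite *TemplateServiceTestSuite) TestUpdateTemplateWithInvalidType() {
	// Create a template first so there is an ID to update.
	// (Setup added for completeness; the name is illustrative.)
	template := models.Template{
		Name: "UpdateTestTemplate",
		Fields: []models.FieldDefinition{
			{Name: "Content", Type: "text", Required: true},
		},
	}
	err := suite.TemplateService.CreateTemplate(context.Background(), &template)
	assert.NoError(suite.T(), err, "Expected no error on template creation")

	// It wanted to test invalid types again, which was redundant
	updatedTemplate := models.Template{
		Name: "UpdateTestTemplate",
		Fields: []models.FieldDefinition{
			{Name: "Content", Type: "invalid_type", Required: true},
		},
	}

	err = suite.TemplateService.UpdateTemplate(context.Background(), template.ID, &updatedTemplate)
	assert.Error(suite.T(), err, "Expected validation error for invalid field type")
}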

While the redundancy was a bit annoying, telling the AI to keep it brief and to the point helped manage the noise. You can still extract valuable test cases from the AI; just know it requires a little extra hand-holding.

The Pros of Using AI for Testing

1. Speed and Efficiency: AI-generated tests gave me a great starting point quickly. Instead of writing boilerplate test cases myself, the AI covered much of the basic structure, letting me focus on refining the service code and edge cases.

2. Idea Generator: There were moments when the AI suggested test cases that I hadn’t thought of. These surprise scenarios helped me anticipate issues earlier, such as handling duplicate templates or invalid data types.

3. Code Cleanup: The AI refactored my tests at one point, consolidating multiple test cases into one. This not only made the test suite cleaner but also easier to maintain. It felt like having a second pair of eyes on the code—sometimes, the AI pointed out patterns that were ripe for consolidation.

The Cons of Using AI for Testing

1. Context Loss: The AI struggled with remembering the full scope of the project, especially when it came to more nuanced logic. I had to regularly remind it not to repeat old tests, which ate into the time I saved by using it.

2. Lack of Deep Understanding: While AI can generate the “what” of tests, it doesn’t always grasp the “why” behind certain decisions. This meant I had to carefully review and tweak most AI-generated tests to ensure they aligned with the service’s true functionality.

3. Prompt Tuning: Getting the AI to generate exactly what I needed required multiple iterations and prompt refinements. It’s not a set-it-and-forget-it solution—more like a collaborative process where you have to guide it.

Conclusion: The Sweet Spot Between AI and Human

Using AI for testing isn’t a magic solution, but it’s a powerful tool. It helped me accelerate the testing process for CenariusCMS, allowing me to cover more cases than I probably would have on my own. However, it’s not perfect. You still have to think critically about your test cases, revise AI-generated output, and, sometimes, fill in gaps where AI falls short. AI can handle the boilerplate and suggest unexpected edge cases, but human oversight is essential to ensure the tests align with real-world scenarios. In the end, the combination of AI speed and human intuition creates a balance that can improve both your code and your development process.